Cooling for GPU Clusters: Air vs Liquid vs Immersion — Real-World Numbers

By 2026, GPU clusters have become the backbone of AI training, scientific modelling and large-scale data analytics. A single server with eight NVIDIA H100 or comparable accelerators can draw around 10–12 kW, while dense AI racks increasingly exceed 80–120 kW. At these power levels, cooling is no longer a background engineering detail but a core design constraint. The choice between advanced air cooling, direct-to-chip liquid cooling and full immersion systems directly affects capital costs, operating expenditure, energy efficiency and long-term reliability. This article examines the three dominant approaches using current industry figures and real deployment data.

Advanced Air Cooling: Limits of Traditional Data Centre Design

Air cooling remains the most widespread approach in existing data centres. Modern high-performance facilities use hot aisle or cold aisle containment, high static pressure fans and rear-door heat exchangers. In 2026, a well-optimised air-cooled rack typically supports 20–30 kW without major redesign. With aggressive airflow management and in-row cooling, some operators reach 40–50 kW per rack, but this is close to the practical ceiling for standard raised-floor environments.

From an efficiency standpoint, air-cooled AI halls usually achieve a Power Usage Effectiveness (PUE) between 1.3 and 1.6, depending on climate and free cooling availability. Fan energy consumption alone may account for 5–10% of total IT load in dense GPU deployments. As rack density grows beyond 40 kW, fan power requirements scale disproportionately, leading to higher noise levels, greater mechanical stress and uneven thermal distribution.
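A rough sensible-heat estimate shows how much air a dense rack needs. The sketch below assumes air density of about 1.2 kg/m³, specific heat of about 1005 J/(kg·K) and a hypothetical 12 K inlet-to-outlet temperature rise; the function name and the sample rack sizes are illustrative rather than taken from any specific deployment.

```python
# Rough airflow needed to carry away rack heat: V = P / (rho * cp * dT).
AIR_DENSITY = 1.2          # kg/m^3, assumed (about 20 °C at sea level)
AIR_SPECIFIC_HEAT = 1005   # J/(kg*K), assumed
M3S_TO_CFM = 2118.88       # cubic metres per second -> cubic feet per minute


def required_airflow_m3s(rack_kw: float, delta_t_k: float = 12.0) -> float:
    """Volumetric airflow (m^3/s) needed to remove rack_kw of heat."""
    if rack_kw < 0 or delta_t_k <= 0:
        raise ValueError("rack_kw must be >= 0 and delta_t_k must be > 0")
    return rack_kw * 1000.0 / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


if __name__ == "__main__":
    for kw in (20, 30, 40, 50):
        flow = required_airflow_m3s(kw)
        print(f"{kw} kW rack: {flow:.2f} m^3/s (~{flow * M3S_TO_CFM:.0f} CFM)")
```

At a fixed temperature rise, airflow grows linearly with heat load. The disproportionate part is fan power, which by the fan affinity laws rises roughly with the cube of flow for a given fan.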

Capital expenditure for air cooling appears lower at first glance, particularly when reusing legacy infrastructure. However, retrofitting a facility to support 40 kW+ racks often requires reinforced power delivery, new computer room air conditioning (CRAC) units and containment systems. Once floor space inefficiency is factored in (lower density means more racks for the same IT load), the total cost per kW can become less attractive for AI-scale installations.
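The floor space effect is simple arithmetic. As a minimal sketch, assuming a hypothetical 1 MW IT load and illustrative per-rack densities, the rack count at each density can be compared like this:

```python
import math


def racks_needed(it_load_kw: float, kw_per_rack: float) -> int:
    """Number of racks required to host it_load_kw at a given density."""
    if kw_per_rack <= 0:
        raise ValueError("kw_per_rack must be positive")
    return math.ceil(it_load_kw / kw_per_rack)


if __name__ == "__main__":
    it_load_kw = 1000  # hypothetical 1 MW hall, not a figure from the article
    for density in (25, 40, 100):
        print(f"{density} kW/rack -> {racks_needed(it_load_kw, density)} racks")
```

Under these assumptions the same load needs 40 racks at 25 kW each but only 10 racks at 100 kW each, before accounting for aisles, cooling units and power distribution.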

Where Air Cooling Still Makes Sense

Air cooling remains viable for edge AI clusters, research labs and medium-scale training environments where rack density does not exceed 25–30 kW. In such cases, deployment speed and compatibility with existing halls can outweigh the efficiency benefits of liquid systems.

In cooler climates, free air cooling significantly reduces operational expenditure. Facilities in Northern Europe, for example, can operate with outside air economisation for much of the year, pushing effective cooling energy overhead down by 20–30% compared with warmer regions.

Another advantage is maintenance familiarity. Most data centre technicians are trained in airflow optimisation and standard HVAC servicing. There is no need for leak detection systems, coolant monitoring or specialised plumbing skills, which simplifies operational procedures.

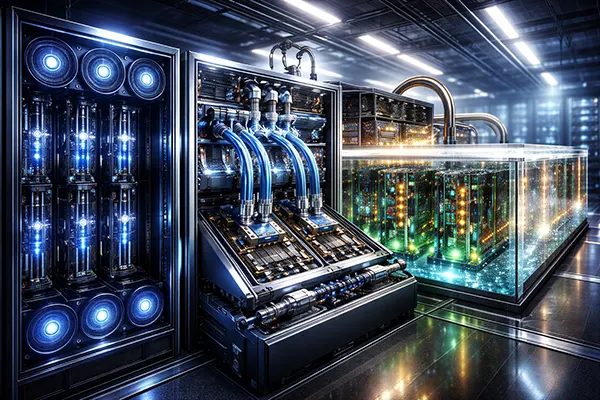

Direct-to-Chip Liquid Cooling: High-Density Efficiency

Direct-to-chip liquid cooling (DLC) has become the dominant solution for high-density AI clusters in 2026. In this configuration, cold plates are mounted directly onto GPUs and CPUs, removing heat via a closed water or water-glycol loop. Modern DLC systems comfortably support rack densities of 60–100 kW, and specialised designs exceed 120 kW per rack.

Water has a volumetric heat capacity roughly 3,500 times that of air, allowing far more efficient heat transport. As a result, facilities using DLC often achieve PUE values between 1.1 and 1.25 in optimised environments. Fan usage drops significantly, as server-level fans can operate at lower RPM or be partially eliminated. Cooling energy overhead may fall below 5% of IT load in well-designed systems.
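The factor of roughly 3,500 can be checked from textbook property values. The sketch below assumes plain water at about 25 °C and air at about 20 °C and sea-level pressure; water-glycol mixes have a somewhat lower heat capacity, so the ratio is an approximation.

```python
# Volumetric heat capacity = density * specific heat, in J/(m^3*K).
WATER_DENSITY = 997.0          # kg/m^3, assumed (about 25 °C)
WATER_SPECIFIC_HEAT = 4186.0   # J/(kg*K), assumed
AIR_DENSITY = 1.2              # kg/m^3, assumed (about 20 °C, sea level)
AIR_SPECIFIC_HEAT = 1005.0     # J/(kg*K), assumed


def volumetric_heat_capacity(density: float, specific_heat: float) -> float:
    """Heat stored per cubic metre per kelvin of temperature rise."""
    return density * specific_heat


def water_to_air_ratio() -> float:
    """How many times more heat a volume of water holds than the same volume of air."""
    water = volumetric_heat_capacity(WATER_DENSITY, WATER_SPECIFIC_HEAT)
    air = volumetric_heat_capacity(AIR_DENSITY, AIR_SPECIFIC_HEAT)
    return water / air


if __name__ == "__main__":
    print(f"water / air volumetric heat capacity: about {water_to_air_ratio():.0f}x")
```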

From a cost perspective, DLC requires higher upfront investment. Cold plates, manifolds, coolant distribution units (CDUs) and leak detection infrastructure add to capital expenditure. Industry estimates in 2026 place incremental costs at approximately £700–£1,200 per kW above standard air-cooled builds. However, energy savings and higher rack density often offset this over a 3–5 year lifecycle, particularly for AI training workloads running at sustained high utilisation.
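A simple payback estimate illustrates how the energy savings alone compare with the incremental cost. This is a sketch under stated assumptions: the PUE values are picked from the ranges quoted in this article, while the electricity price of £0.15 per kWh and full utilisation are hypothetical inputs. It ignores density gains, maintenance and financing costs.

```python
HOURS_PER_YEAR = 8760


def annual_savings_per_kw(pue_air: float, pue_dlc: float,
                          price_per_kwh: float, utilisation: float = 1.0) -> float:
    """Yearly energy cost saved per kW of IT load when PUE falls."""
    return (pue_air - pue_dlc) * HOURS_PER_YEAR * utilisation * price_per_kwh


def simple_payback_years(extra_capex_per_kw: float, savings_per_kw_year: float) -> float:
    """Years needed for energy savings to repay the extra capital cost."""
    if savings_per_kw_year <= 0:
        raise ValueError("savings must be positive for a payback to exist")
    return extra_capex_per_kw / savings_per_kw_year


if __name__ == "__main__":
    # Assumed inputs: PUE 1.4 (air) vs 1.15 (DLC), £0.15/kWh (hypothetical).
    savings = annual_savings_per_kw(pue_air=1.4, pue_dlc=1.15, price_per_kwh=0.15)
    for capex in (700, 1200):
        years = simple_payback_years(capex, savings)
        print(f"£{capex}/kW extra capex -> payback in {years:.1f} years")
```

With these inputs the savings come to about £330 per kW of IT load per year, so the payback is sensitive mainly to the electricity price and the PUE gap actually achieved.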

Operational Realities of Liquid Cooling

Reliability data from hyperscale operators indicates that modern quick-disconnect fittings and sealed loops have reduced leak incidents to well below 0.1% per year across large fleets. Proper commissioning and pressure testing are critical to achieving this level of reliability.

DLC loops typically run at 25–35°C on the supply side and can reach 45–50°C on return. This higher return temperature enables efficient heat reuse for district heating or industrial processes, improving overall site energy performance.
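The supply and return temperatures determine how much coolant must flow through a rack. The sketch below assumes plain water properties and, as a simplification, that all rack heat is captured by the liquid loop; in practice part of the heat still leaves via air. The 100 kW rack and the 30 °C and 45 °C temperatures are illustrative values within the ranges above.

```python
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), assumed; glycol mixes are lower
WATER_DENSITY = 997.0         # kg/m^3, assumed


def coolant_flow_l_per_min(heat_kw: float, supply_c: float, return_c: float) -> float:
    """Water flow (litres per minute) needed to absorb heat_kw at the given temperatures."""
    delta_t = return_c - supply_c
    if delta_t <= 0:
        raise ValueError("return temperature must exceed supply temperature")
    mass_flow_kg_s = heat_kw * 1000.0 / (WATER_SPECIFIC_HEAT * delta_t)
    volume_flow_m3_s = mass_flow_kg_s / WATER_DENSITY
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


if __name__ == "__main__":
    flow = coolant_flow_l_per_min(heat_kw=100, supply_c=30, return_c=45)
    print(f"100 kW rack, 30 -> 45 °C: about {flow:.0f} L/min")
```

A wider temperature difference reduces the required flow proportionally, which is one reason warm return water suits both pumping efficiency and heat reuse.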

Maintenance complexity increases compared with air systems. Facilities must monitor coolant quality, prevent corrosion and manage pump redundancy. Nevertheless, the performance benefits in high-density GPU environments have made DLC the preferred option for new AI-focused data centres across Europe, North America and parts of Asia.

Immersion Cooling: Maximum Density and Thermal Stability

Immersion cooling submerges servers entirely in dielectric fluid, eliminating traditional air pathways. In 2026, single-phase immersion systems commonly support rack-equivalent densities above 100 kW, while two-phase systems can exceed 150 kW in specialised deployments. Thermal uniformity is significantly improved because components are cooled evenly from all sides.

Energy efficiency is a key advantage. Properly engineered immersion facilities can achieve PUE figures between 1.05 and 1.15. Since there are no high-speed server fans, electrical savings can reach 10–15% at the server level alone. The absence of hot spots also reduces thermal throttling, potentially improving sustained GPU performance by several percentage points under heavy AI training loads.
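The PUE ranges quoted in this article for the three approaches can be turned into facility overhead for a given IT load. As a minimal sketch, assuming a hypothetical 1 MW IT load and using PUE as total facility power divided by IT power:

```python
# PUE ranges as quoted in this article; the 1 MW load below is hypothetical.
PUE_RANGES = {
    "air": (1.3, 1.6),
    "direct-to-chip": (1.1, 1.25),
    "immersion": (1.05, 1.15),
}


def overhead_kw(it_load_kw: float, pue: float) -> float:
    """Non-IT power (cooling, power conversion, etc.) implied by a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * (pue - 1.0)


if __name__ == "__main__":
    it_load_kw = 1000.0
    for name, (low, high) in PUE_RANGES.items():
        lo_kw = overhead_kw(it_load_kw, low)
        hi_kw = overhead_kw(it_load_kw, high)
        print(f"{name}: {lo_kw:.0f} to {hi_kw:.0f} kW overhead per MW of IT load")
```

PUE covers all non-IT power, not only cooling, so these figures are an upper bound on the cooling share.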

However, immersion introduces higher initial capital costs and operational adaptation. Dielectric fluids are expensive, and tank-based infrastructure differs substantially from conventional rack layouts. Hardware compatibility must also be validated, as not all manufacturers officially certify immersion deployments, although support has expanded significantly by 2026.

Practical Trade-Offs of Immersion Systems

Fluid management is central to long-term stability. Operators must monitor contamination levels and periodically filter or replace fluid. While modern fluids are stable and non-conductive, strict handling procedures are required to maintain performance.

Serviceability differs from traditional racks. Removing a server from an immersion tank involves lifting it out, letting the fluid drain off and wearing protective equipment. Although training mitigates this issue, maintenance workflows are less intuitive for teams accustomed to air-cooled halls.

Immersion shows its strongest economic case in ultra-high-density AI clusters where space is limited and electricity costs are high. In colocation scenarios or retrofits, physical layout constraints may limit its practicality despite strong efficiency metrics.